A/B testing gets talked about like it’s a magic switch for conversions. One tweak and suddenly sales skyrocket, right? In reality, most store owners run a test or two, get lost in stats or setup, and either give up or make decisions on shaky data. No wonder it feels messy.

The truth is, A/B testing works only when you approach it with a clear process. You don’t need a PhD in statistics, and you don’t need Amazon-level traffic. What you do need is a framework: what to test, how long to run it, what numbers actually matter, and how to avoid the mistakes that waste time and money.

That’s exactly what we’re going to cover in this article. We’ll walk through how to plan and run an A/B test for your ecommerce store, from setting goals and choosing tools to reading results with confidence.

By the end, you’ll know how to stop guessing, start testing, and use data to make decisions that grow your store.


What is A/B Testing and Why It Matters for Ecommerce

Every visitor to your store is making tiny choices: click or scroll, buy or bounce. The problem is you don’t always know what’s nudging them toward checkout or pushing them away. A/B testing is how you find out.

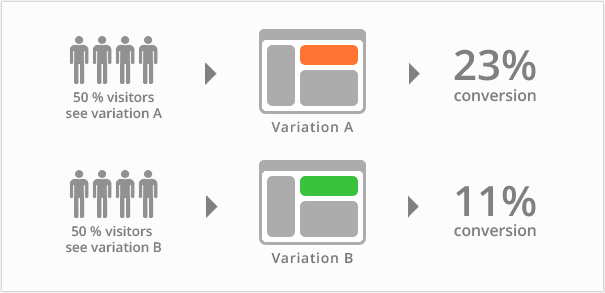

At its core, A/B testing means showing two versions of a page, element, or experience to different groups of visitors and tracking which performs better. Version A is your current setup, Version B is the change you want to test. The numbers tell you which one actually drives more sales, not your gut feeling or your designer’s opinion.

For ecommerce, this is a game-changer. Even small tweaks can have an outsized impact. Moving your “Add to Cart” button above the fold can lift clicks. Highlighting free shipping more clearly can raise conversions. Changing how you frame a discount can nudge average order value upward.

A/B testing sits at the core of ecommerce optimization strategies because it shows you which changes truly move the needle. Paired with tactics like better checkout flows, clearer product pages, and stronger offers, it creates a systematic way to refine the entire customer journey.

The biggest win? Confidence. Instead of wondering if a redesign helped or hurt, you know. Instead of arguing in meetings, you have proof.

How to Plan and Set Up Your A/B Test

A good A/B test starts before you ever touch a tool. The planning stage is where you decide what you’re testing, why you’re testing it, and how you’ll measure success. Skipping this step is why so many tests flop.

- Define your goal and success metric

Every test needs one clear success signal. Pick the metric that matters most to your store’s bottom line: conversion rate, average order value (AOV), or checkout completion. Write it down before launch and commit.

The key here is discipline. If you start testing with five different metrics in mind, you’ll get lost in the noise. One primary metric keeps the test focused and prevents you from shifting the goalposts halfway through. Secondary metrics are fine to keep an eye on, but they’re background players at this stage.
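To make the three candidate metrics concrete, here is a minimal sketch of how each one is usually calculated. The function name and the example numbers are made up for illustration; your analytics tool may define these slightly differently (for example, counting sessions instead of visitors).

```python
def primary_metrics(visitors, checkouts_started, orders, revenue):
    """Compute the three candidate primary metrics from raw counts.

    All inputs are totals for one variant over the test period.
    """
    return {
        # Share of visitors who placed an order
        "conversion_rate": orders / visitors,
        # Revenue per order (AOV)
        "average_order_value": revenue / orders,
        # Share of started checkouts that ended in a purchase
        "checkout_completion": orders / checkouts_started,
    }


# Hypothetical numbers for one variant
metrics = primary_metrics(visitors=10_000, checkouts_started=600, orders=300, revenue=18_000.0)
print(metrics)
```

Pick one of these as the primary metric before launch and treat the others as secondary.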

- Build a strong hypothesis

A/B testing shouldn’t be throwing ideas at the wall to see what sticks. You need to test a reasoned prediction. A strong hypothesis connects user behavior, the change you’re making, and the outcome you expect.

Bad hypothesis: “Let’s change the product photo to see if sales improve.”

Good hypothesis: “If the product photo shows the bag worn by a model instead of flat-lay, visitors will better visualize size and style, which will increase add-to-cart clicks.”

The second version works because:

- It’s based on user behavior (shoppers may be confused about product scale).

- It defines the variable (product photo style).

- It sets an outcome (higher add-to-cart rate).

This level of clarity makes analysis much easier later. Your test either confirms or disproves the hypothesis. Either way, you learn something valuable.

- Test one element at a time

One of the most common A/B testing mistakes is treating it like a redesign. If you change multiple things at once (new headline, new button, new layout) you won’t know which part drove the result.

Keep your tests laser-focused:

- Single-variable tests: Change one thing only, like CTA copy (“Buy Now” vs. “Add to Cart”) or placement of the shipping message.

- Iterative testing: Run a series of smaller tests rather than one big “redesign vs. old site” test. It’s slower, but you’ll collect insights that stack up over time.

Yes, this requires patience, but the payoff is cleaner insights you can actually use to guide future decisions.

- Pick the right tools

The tool you use depends on your platform, your traffic, and how much complexity you’re ready to handle.

- Shopify-specific apps: If you’re running your store on Shopify and want a fast, simple setup, apps like Intelligems and Shoplift are built for you. They let you test prices, product pages, or theme elements directly in your store with minimal coding. Ideal for founders or lean teams who need to get experiments live quickly.

- All-in-one testing platforms: Tools like VWO and Zoho PageSense go beyond simple split testing. They give you a visual editor, heatmaps, funnel analysis, and even surveys all in one place. Perfect if you want to understand not only which version wins but also why without juggling multiple tools.

- Enterprise solutions: If you’re at scale and running multiple experiments across different segments, platforms like Optimizely and Adobe Target are the industry standards. They’re powerful and flexible, but they also require more budget, more setup, and often developer support. Best suited for larger ecommerce brands with dedicated optimization teams.

Whatever you choose, make sure your testing tool integrates with your analytics tools (Shopify dashboards, GA4, Klaviyo). If your tools don’t talk to each other, you’ll waste time reconciling data instead of acting on insights.

How to Run an A/B Test the Right Way

Once your hypothesis and tools are ready, it’s time to launch the experiment. This is where impatience and guesswork can ruin weeks of effort, so getting the basics right matters.

- Sample size and traffic

A/B tests only work if you have enough data to trust the outcome. The number of visitors or conversions you need depends on three factors:

- Your current baseline (e.g., if 3% of visitors buy, that’s your starting rate).

- The lift you expect to see (a 1% relative bump needs far more data to prove than a 10% bump).

- The confidence level you want (95% confidence is the gold standard in ecommerce).

To figure it out, use free calculators from VWO, Optimizely, or Evan Miller’s classic sample size calculator. They’ll tell you how many visitors you need before you can call a result significant.
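If you are curious what those calculators do under the hood, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. It is an approximation, not the exact method of any particular tool, and it assumes a two-sided test with 80% statistical power, which is a common default that this article does not specify. The function and parameter names are illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variant.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_lift: smallest lift worth detecting, e.g. 0.10 for +10%
    alpha: 1 - confidence level (0.05 means 95% confidence)
    power: chance of detecting the lift if it is real (assumed 80%)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# A 3% baseline with a 10% relative lift vs. a 1% relative lift
print(sample_size_per_variant(0.03, 0.10))
print(sample_size_per_variant(0.03, 0.01))
```

Running both calls side by side shows how quickly the required traffic grows as the expected lift shrinks, which is why low-traffic stores are better off testing bigger changes.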

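Low traffic? Don’t panic. Instead of testing micro-changes like button shades, focus on bigger shifts that are more likely to move the needle. You can also use sequential testing, which lets you evaluate results as they come in, but only with a tool or method built for it: checking a standard test repeatedly and stopping at the first “significant” reading inflates false positives. Supplementing A/B tests with qualitative data from heatmaps and surveys helps too.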

Pro tip: Run an occasional A/A test (two identical versions against each other) to make sure your tool is splitting traffic correctly and your data setup is clean.
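One concrete thing to check in an A/A test (or any live test) is whether the visitor counts match the split you configured. A common way to do that is a chi-square test for sample ratio mismatch, sketched below. The function name is hypothetical, and the 0.001 alert threshold is a widely used convention rather than something this article prescribes.

```python
import math


def srm_p_value(visitors_a, visitors_b, expected_share_a=0.5):
    """P-value for 'the observed split matches the planned split'.

    Uses a chi-square goodness-of-fit test with 1 degree of freedom.
    A very small p-value suggests the tool is not splitting traffic as planned.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (
        (visitors_a - expected_a) ** 2 / expected_a
        + (visitors_b - expected_b) ** 2 / expected_b
    )
    # Survival function of the chi-square distribution with 1 degree of freedom
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts from a test configured as 50/50
p = srm_p_value(5_000, 5_400)
if p < 0.001:  # assumed alert threshold
    print("Split looks off; check the setup before trusting results.")
```

If the check fails, look at redirects, bot filtering, and caching before you look at conversion numbers.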

- How long to run your test

Time is as important as traffic. Cut a test too short and you’ll see false winners. Run it too long and outside factors start polluting your results.

- Minimum: At least one full business cycle (7 days) so you capture weekday and weekend behavior.

- Typical: 2–4 weeks, depending on how much traffic you have.

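- Rule: End the test once you’ve hit the required sample size and covered at least one full week, then check for statistical significance. If the result still isn’t significant at that point, treat it as inconclusive rather than extending the test until a winner appears.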

Don’t stop the moment you see a variant pulling ahead. Results often swing wildly in the first few days. And don’t let tests drag for months: holiday traffic, ad campaigns, or market shifts can make the data unreliable.
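The duration guidance above can be turned into a quick back-of-the-envelope estimate. This sketch assumes steady daily traffic and rounds up to whole weeks so every weekday is covered equally; the function name and the example numbers are illustrative only.

```python
import math


def planned_duration_days(visitors_per_variant, daily_visitors, variants=2, min_days=7):
    """Estimate how many days a test needs, rounded up to full weeks.

    visitors_per_variant: required sample size for each variant
    daily_visitors: visitors entering the test per day (all variants combined)
    """
    days_for_sample = math.ceil(visitors_per_variant * variants / daily_visitors)
    days = max(days_for_sample, min_days)
    # Round up to whole weeks to capture weekday and weekend behavior evenly
    return math.ceil(days / 7) * 7


print(planned_duration_days(visitors_per_variant=10_000, daily_visitors=1_500))  # 14
```

If the estimate lands far beyond the 2–4 week range, that is usually a sign to test a bolder change rather than to let the test run for months.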

- Tracking setup

Your test is only as good as the data you collect. Double-check that:

- Conversions are tracked correctly in GA4 or your ecommerce platform.

- Traffic is split the way you planned (usually 50/50; an uneven split like 60/40 is fine if it’s deliberate and the tool reports the same ratio).

- Returning visitors always see the same version (consistency is key).

- Events like add-to-cart, checkout started, and purchase completed are firing properly.

A quick QA before launch saves you from realizing two weeks later that the “winning” variant was never tracked correctly.
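Most testing tools handle consistent assignment for you, but it helps to know how it typically works. One common approach, sketched here as an assumption rather than any specific tool’s implementation, is to hash a stable visitor ID together with the experiment name so the same visitor always lands in the same bucket. The names `assign_variant`, `visitor_id`, and `experiment_name` are hypothetical.

```python
import hashlib


def assign_variant(visitor_id, experiment_name, share_b=0.5):
    """Deterministically assign a visitor to variant "A" or "B".

    The same visitor_id and experiment_name always produce the same answer,
    so returning visitors keep seeing the version they saw first.
    """
    key = f"{experiment_name}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to a roughly uniform number between 0 and 1
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if bucket < share_b else "A"


# The same visitor gets the same variant on every visit
print(assign_variant("visitor-123", "cta-copy"))
print(assign_variant("visitor-123", "cta-copy"))
```

Including the experiment name in the hash keeps assignments independent across tests, so a visitor who saw version B in one experiment is not automatically in version B of the next.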

How to Interpret A/B Test Results in Ecommerce

Your test has run its course, the data is in, and now comes the part that trips up most store owners: figuring out what it actually means. Numbers alone don’t give you answers unless you know which ones to focus on.

- Statistical vs. practical significance

Most testing tools will tell you when a result is “statistically significant.” That’s the mathy way of saying a difference this large would be very unlikely to show up if the two versions actually performed the same. In ecommerce, the standard is 95% confidence.

But here’s the catch: statistical significance doesn’t always equal practical significance. If your conversion rate moves from 3.0% to 3.1%, the tool might say it’s a “win,” but does that tiny lift justify the time, effort, and cost of rolling it out? Always pair the numbers with business sense.
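Here is a rough sketch of how the two checks can sit side by side. It uses a standard two-proportion z-test for the statistical part, and a minimum relative lift that you choose for the practical part. The 5% minimum lift and all of the counts are made-up assumptions; your testing tool may use a different statistical method.

```python
import math
from statistics import NormalDist


def compare_variants(conv_a, n_a, conv_b, n_b, min_relative_lift=0.05, alpha=0.05):
    """Two-sided two-proportion z-test plus a practical-significance check."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_lift = (rate_b - rate_a) / rate_a
    return {
        "relative_lift": relative_lift,
        "p_value": p_value,
        "statistically_significant": p_value < alpha,
        # Assumed business rule: only ship if the lift clears your own threshold
        "practically_significant": relative_lift >= min_relative_lift,
    }


# Hypothetical: 3.0% vs 3.1% conversion with one million visitors per variant
print(compare_variants(conv_a=30_000, n_a=1_000_000, conv_b=31_000, n_b=1_000_000))
```

With that much traffic, the 3.0% to 3.1% move passes the statistical check but falls short of the 5% minimum lift, which is exactly the situation where business judgment has to take over.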

- Metrics that actually matter

When the test ends, it’s time to zoom out. Start with your primary metric: did conversion rate, AOV, or checkout completion improve? That’s the headline.

But don’t stop there. Secondary metrics reveal side effects that can change the story:

- Bounce rate: Did your new page turn people off? A sudden spike shows the variant pushed visitors away instead of pulling them in.

- CTR (click-through rate): Are more visitors engaging with CTAs? A higher CTR signals stronger intent, even if it doesn’t always lead to a purchase.

- Cart abandonment: Did checkout flow changes confuse or reassure buyers? Tracking this helps you spot friction and gives you data you can use to reduce cart abandonment across the store.

- Time on page: Did the update help people navigate faster or slow them down? Sometimes shorter means customers found what they needed quickly.

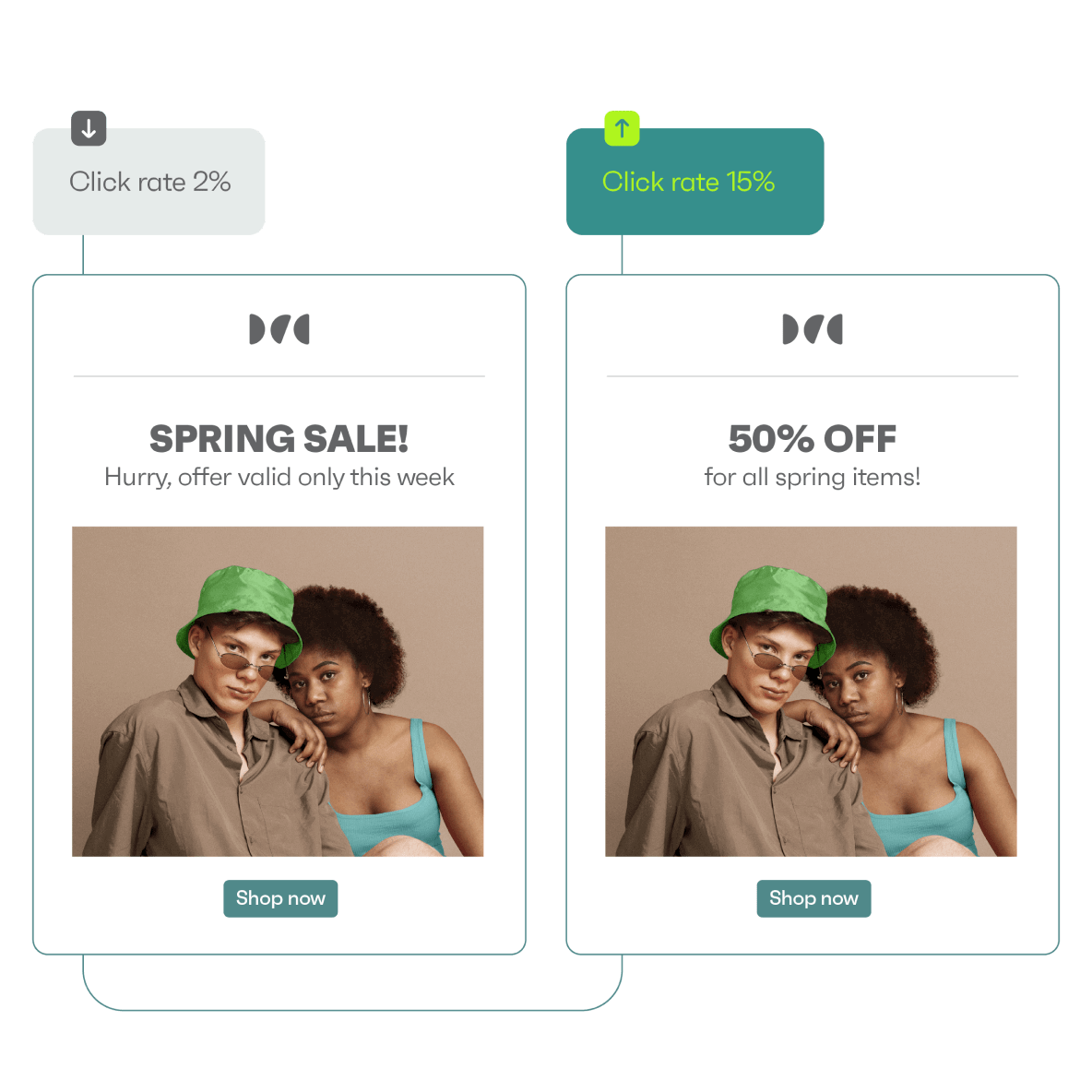

Channel context matters too. For emails, ignore inflated open rates and track clicks or conversions. For ads, ROAS and cost per conversion tell you if the change was worth the spend.

Segments matter as well. A variant that “loses” overall can still be a big win for one group, such as mobile shoppers or new visitors, and that insight can guide your next test. These patterns often open the door to ecommerce personalization, where different customer segments see the version that works best for them instead of a one-size-fits-all page.

- Digging deeper into your data

Don’t stop at the averages. Break down results by segment:

- Device: A test that boosts desktop sales might tank on mobile.

- New vs returning customers: Regulars may respond differently to pricing or layout shifts.

- Traffic source: Paid ad visitors might act unlike organic ones.

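Segmenting gives you context and prevents bad rollouts. If your variant only wins in one group, maybe it deserves a targeted application instead of a universal change. Keep in mind that each segment has a smaller sample than the full test, so treat a segment-level win as a hypothesis to confirm with a follow-up test.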
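If your tool lets you export raw visit data, a segment breakdown takes only a few lines. The sketch below assumes each exported row can be reduced to a segment label, a variant, and whether the visitor converted; that row format and the sample rows are invented for illustration.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """Summarize conversion rate per (segment, variant) pair.

    visits: iterable of (segment, variant, converted) tuples,
            e.g. ("mobile", "B", True)
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for segment, variant, converted in visits:
        totals[(segment, variant)][0] += 1
        totals[(segment, variant)][1] += int(converted)
    return {
        key: {"visitors": v, "conversions": c, "rate": c / v}
        for key, (v, c) in totals.items()
    }


# Tiny made-up sample; real exports will have thousands of rows
sample = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", False), ("desktop", "B", True),
]
for key, stats in conversion_by_segment(sample).items():
    print(key, stats)
```

Always look at the visitor count next to each rate: a segment with only a few dozen visitors can show a dramatic difference that is nothing more than noise.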

- Document your findings

Every test, whether it wins, loses, or comes out neutral, teaches you something. Keep a simple log:

- Date, hypothesis, variable tested

- Sample size and duration

- Results (primary + secondary metrics)

- Key takeaways

This stops you from repeating the same test twice and builds a library of insights you can share with your team. Over time, this log becomes a roadmap of what works (and doesn’t) for your store.
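A spreadsheet is plenty for this, but if you prefer to keep the log next to your code, a small structure like the one below covers the same fields. The field names and the example entry are hypothetical; adapt them to whatever your team actually records.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class ExperimentRecord:
    start_date: str
    hypothesis: str
    variable_tested: str
    sample_size_per_variant: int
    duration_days: int
    primary_result: str
    secondary_results: str
    takeaway: str


def records_to_csv(records):
    """Render a list of ExperimentRecord objects as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExperimentRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Made-up entry, loosely based on the product photo hypothesis earlier in this article
log = [ExperimentRecord(
    start_date="2025-01-06",
    hypothesis="Model photo helps visitors judge size and style",
    variable_tested="Product photo style",
    sample_size_per_variant=12000,
    duration_days=14,
    primary_result="Add-to-cart rate: inconclusive",
    secondary_results="Bounce rate unchanged",
    takeaway="Try a size-comparison image next",
)]
print(records_to_csv(log))
```

The output can be pasted into a spreadsheet or saved as a `.csv` file so the whole team can search past tests.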

Common Pitfalls to Avoid while A/B Testing

A/B testing sounds simple, but there are a few traps that waste time and muddy your results:

- Running tests during unusual traffic spikes. A holiday promo, a TikTok mention, or a one-day flash sale can flood your site with atypical visitors. Those results won’t hold once traffic normalizes.

- Letting design tools dictate the test. Visual editors are great, but if your devs can’t replicate the winning variant in production, you’ve wasted a test. Always check feasibility before you launch.

- Calling winners too early. Ending a test after a couple of days or a handful of conversions is the fastest way to fool yourself. Let it run until it reaches the sample size you planned, then check for statistical significance.

- Treating inconclusive results as failures. “No difference” is still valuable. It tells you not to waste resources on that change. Logging those insights sharpens future hypotheses.

- Testing too many things at once. If you change a headline, a hero image, and your CTA button all in one test, you’ll never know what actually moved the needle. Keep it clean, one variable at a time.

- Forgetting about traffic limits. Low-traffic stores need to prioritize bigger changes. Small tweaks can take months to validate, which kills momentum.

- Ignoring segments. A test that fails overall might be a win on mobile or for new visitors. Always break down results before tossing them out.

Avoid these mistakes, and your tests will go from “random experiments” to reliable growth drivers.

Conclusion

A/B testing creates confidence in your decisions.

Each experiment builds on the last, giving you real data. With a clear goal, a strong hypothesis, and disciplined tracking, you set yourself up for results you can trust.

Start with one meaningful change, run the test long enough to gather solid data, and document what you learn. Over time, those insights stack into a system that drives steady, measurable growth.

Testing is not a one-time project. It is an ongoing practice that shapes your store into something sharper, stronger, and more profitable with every cycle.

